These days, Threat Hunting is a much-discussed term within the infosec community. However, there is no widely shared definition of the role. Discrepancies persist because everyone considers their own implementation the right way to do it. Still, although the industry has yet to agree formally on what exactly being a Threat Hunter entails, and on what their scope of action is, there are some points on which consensus has been reached.

First, Threat Hunting has an inherently proactive nature that it does not share with traditional cybersecurity defence roles. Companies used to be limited to taking all the preventive and reactive measures available to protect their infrastructure and hoping for the best: avoiding compromise or, at least, being able to mitigate the damage when it eventually occurred. The figure of the Threat Hunter stems from the need to take proactive measures on the defensive side to keep up with the constant increase in the quantity and sophistication of cybersecurity attacks observed in recent years.

Its appearance changes the classic game of Threat Actor vs. Victim Company, as there is now a new player on the board: the Hunter, whose role requires deep offensive knowledge that is, for the first time, applied to staying one step ahead of the Threat Actor. That knowledge is based on researching state-of-the-art attacks and dissecting how they work, in order to understand how current adversaries think and what their go-to techniques are. With this new player on the board, the traditional victim gains its own offensive-driven guardian: a person who uses their offensive knowledge to detect and stop incidents before they become unmanageable.

So far, we have established the basics of what a Threat Hunter is, to a level that most of the industry offering a Threat Hunting service should agree on. Now we will go further into the specifics of what our own concept of Threat Hunting includes.

Although we think a Threat Hunter must have a transversal skillset that allows them to be self-sufficient in all of the areas in which an attacker could leave traces, our service performs most of its hunting tasks from the perspective of the endpoint, and our weapon of choice is the EDR/XDR.

Every day we assume the hypothesis that all our clients have somehow been compromised. To refute it, we search their infrastructure for evidence with a large number of queries that look for specific techniques an adversary could have used to get through the client's perimeter. These queries are the result of continuous research, and they are designed to find not only techniques that APTs have already been seen using in the wild, but also novel techniques that could be used even if they haven't been yet.

After we run our queries, we go through every match and discard false positives. To perform this analysis, we use the telemetry fed to the EDR/XDR by the endpoints. Although every EDR/XDR has its particularities, all of the ones that we homologate have the features needed to answer the questions we raise when evaluating a match. Finally, it is only when we are sure that none of the results are related to malicious behaviour that we rule out the hypothesis of compromise.
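The hypothesis-driven loop described above can be sketched in a few lines of Python. This is only an illustration under our own assumptions: the `Match` and `Verdict` types and the query names are hypothetical and do not correspond to any particular EDR/XDR product.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    UNDETERMINED = "undetermined"      # match not triaged yet
    FALSE_POSITIVE = "false_positive"  # benign activity, discarded
    MALICIOUS = "malicious"            # confirmed suspicious behaviour


@dataclass
class Match:
    query_name: str  # hypothetical hunting query that produced the match
    endpoint: str    # endpoint on which the match was observed
    verdict: Verdict = Verdict.UNDETERMINED


def compromise_ruled_out(matches: list[Match]) -> bool:
    """The hypothesis of compromise is refuted only when every match
    has been triaged and classified as a false positive."""
    return all(m.verdict is Verdict.FALSE_POSITIVE for m in matches)


# Hypothetical triage state after one hunting round.
matches = [
    Match("suspicious_lsass_access", "host-01", Verdict.FALSE_POSITIVE),
    Match("office_spawns_shell", "host-02"),  # still untriaged
]
print(compromise_ruled_out(matches))
```

The point of the sketch is the default: an untriaged match keeps the hypothesis of compromise alive, so the absence of a verdict is never treated as a clean result.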

Approaching the creation of an EDR evaluation methodology

When it comes to what makes an «EDR» an EDR, and what its core features are, we find yet again a diversity of criteria. In this case, it is not so much because of a lack of consensus within the infosec community itself, but because of vendors, who often try to tag their security solutions with the «EDR» label to enhance the appeal of their product, even when it doesn't meet the most basic criteria of what an EDR should do.

We have already stated that the EDR/XDR is our weapon of choice. As an independent provider, we strive to be technology agnostic and to blend in with heterogeneous environments as smoothly as possible. Therefore, it is a must for us to know and keep track of the features that support our Threat Hunting model, the ones that are incompatible with it, and the evolution of both groups over time. To formalise that process and make it scalable, we decided to create a custom EDR evaluation methodology.

State of the art of EDR evaluation

We approached the task from the perspective of our own broad experience in providing a high-quality Threat Hunting service. This has entailed working with several EDR solutions over the years, each with its pros and cons. However, research was also needed to establish a baseline of how the community has been evaluating EDRs so far, to check whether any of those approaches suited our Threat Hunting model, and to avoid duplication in case there were already ongoing projects that met our requirements. During this investigation, we found that the current trend in EDR evaluation has roughly two branches.

Technical evaluation projects

These evaluations focus on the raw detection capabilities of the EDRs. Having a good range of detections is without doubt a key feature of any solution that aims to call itself an EDR. However, we concluded that this kind of evaluation is not sufficient on its own and needs to be complemented with functional ones. That is because even if an EDR has the best detection rate, if it doesn't retrieve telemetry that we can use to reject our compromise hypothesis, or if it doesn't have the basic features to respond to an incident, the Threat Hunting service will be limited.

On the other hand, an EDR with a narrower detection range but more in tune with our Threat Hunting model could be enhanced by our extensive knowledge base and become a viable option.

In this category, we would like to highlight the MITRE Engenuity ATT&CK Evaluations project, which provides a yearly evaluation of the detection capabilities of several EDR solutions whose vendors apply to demonstrate their efficacy. We used these evaluations as one scoring factor for our own EDR evaluation methodology.

Functional evaluation projects

These projects prioritise evaluating the amount of telemetry provided by the EDR. In this category, we also found remarkable projects. We would like to make a special mention of the EDR Telemetry Project. This project was created to «compare and evaluate the telemetry potential from those tools while encouraging EDR vendors to be more transparent about the telemetry features they do provide to their users and customers».

It gave us a very valuable checklist of the telemetry sources that different EDR solutions make use of and, consequently, of the coverage we can expect from them. Although this project is closer to the approach we want to follow in our custom methodology, it doesn't completely align with our needs. Nevertheless, we ended up using it as a guide to decide which new EDR candidates would be worth evaluating with our custom methodology.

Although our EDR evaluation methodology also evaluates the telemetry provided by an EDR, we focus more on the concrete data recovered from each data source, making sure that the information gathered is of high enough quality for us to implement our hunting queries. Hence, we created a symbiotic relationship between our methodology and this project, and avoided duplicating the great work that its authors are already doing.

Although none of the existing projects matched our own very specific needs, they laid a strong foundation and gave us a starting point to work from.

Once the research into the state of the art had ended, we started to define the custom parts of our EDR evaluation methodology. Defining them accounts for most of the work of this project. It involves formalising the whole set of features that an EDR should have implemented to keep up with our quality standard for performing Threat Hunting.

The selection of these features is synthesised from our own technical expertise, acquired over years of providing a Threat Hunting service. Note that since our service is technology agnostic, and we have worked with and evaluated several EDR technologies over the years, this feature checklist could be considered a compilation of all the remarkable aspects of the different EDR solutions that we have encountered so far.

As one final note before diving into the sections in which we categorised these features, it is important to clarify that this EDR evaluation methodology is meant to be applied on the premise that, for any EDR, we have:

- Access to the EDR documentation.

- Access to a real environment with the EDR deployed, so we can test and verify features.

- Access to the EDR's support team, as sometimes the lack of documentation leaves uncertainty about the EDR's reach in some areas that are hard to reproduce in a real environment.

Without these three elements, we don't think the evaluation can be a representative reflection of the quality of the solution and of how it would interact with our Threat Hunting model.

Telemetry

In this section we focus on evaluating whether the available telemetry is sufficient in quantity and quality to perform our Threat Hunting model. That makes this section especially dependent on our way of performing Threat Hunting. However, we try to be as verbose as possible in our evaluations, including comments that justify the scores we give to each feature. In that sense, we believe that this telemetry section could be one of the most useful for other service providers, as we all analyse telemetry in one way or another.

Two of the features that we consider most important and worth explaining in this section are the TTA (Time for the Telemetry to Arrive) and the TTL (Time that Telemetry Lives, i.e. remains available in the EDR).

TTA exists because we need to be sure that, if we find any trace of suspicious activity, we can ask the telemetry in real time all the questions we need to resolve the case. If the telemetry takes, let's say, 15 minutes to arrive, then we will be at least 15 minutes behind the adversaries at any given moment. This may not seem like much of a delay, but in a live incident it could mean the difference between an early mitigation and a full compromise.
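As a rough illustration of how TTA could be measured, the sketch below compares the time an event was generated on the endpoint with the time it became queryable in the console. The timestamp pairs and the one-minute threshold are assumptions made for the example, not values taken from any EDR or from our methodology.

```python
from datetime import datetime, timedelta


def tta_report(events, threshold=timedelta(minutes=1)):
    """Summarise telemetry arrival delays.

    `events` is a list of (generated_at, queryable_at) datetime pairs.
    `threshold` is an assumed tolerance above which an event counts as late.
    """
    delays = [queryable - generated for generated, queryable in events]
    late = [d for d in delays if d > threshold]
    return {
        "worst": max(delays),                    # the lag we must assume during an incident
        "late_ratio": len(late) / len(delays),   # share of events over the threshold
    }


# Hypothetical sample: three events arrive quickly, one takes 15 minutes.
base = datetime(2024, 1, 1, 12, 0, 0)
events = [
    (base, base + timedelta(seconds=20)),
    (base, base + timedelta(minutes=15)),
    (base, base + timedelta(seconds=40)),
    (base, base + timedelta(seconds=50)),
]
report = tta_report(events)
print(report["worst"], report["late_ratio"])
```

Reporting the worst case rather than an average is a deliberate choice here: during a live investigation, the slowest event is the one that determines how far behind the adversary the hunter may be.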

The TTL is also a very valuable feature for us. To detect suspicious behaviour, the first thing we have to know is what normal behaviour looks like in the environment. When we start working with a client, we can't immediately learn the way their environment works, as that comes with time. So having past telemetry available can help us make up for that lack of context. This feature also takes into account limitations in telemetry storage, such as:

- Some EDR technologies stop storing telemetry from an endpoint after a certain storage threshold has been reached. This kind of limitation could enable Denial of Service attacks, in which an adversary generates telemetry related to non-suspicious activity until the EDR stops storing it, and then carries out their operations while we are blinded.

- Some EDR technologies have telemetry models based on storing only part of the information in the cloud and letting the analyst query the devices to obtain further context. In these cases, offline machines mean losing access to their telemetry.
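Both the TTL and the storage limitations listed above can be probed from the telemetry itself. The following sketch, which assumes we can export the timestamps of the events stored for one endpoint, estimates the effective retention window and flags silent periods. A gap does not tell us by itself whether a storage cap was hit or the machine was simply offline, so it only produces candidates for manual review; the dates and the one-day tolerance are hypothetical.

```python
from datetime import datetime, timedelta


def retention_window(event_times, now):
    """Age of the oldest event that is still queryable: an empirical lower bound for the TTL."""
    return now - min(event_times)


def silent_gaps(event_times, max_gap):
    """Return (start, end) pairs during which the endpoint produced no telemetry for longer than max_gap."""
    ordered = sorted(event_times)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > max_gap]


# Hypothetical timestamps exported for a single endpoint.
now = datetime(2024, 3, 1)
times = [
    datetime(2024, 2, 1, 8),
    datetime(2024, 2, 1, 9),
    datetime(2024, 2, 10, 9),   # nothing stored between 1 Feb and 10 Feb
    datetime(2024, 2, 10, 10),
]
print(retention_window(times, now))
print(silent_gaps(times, max_gap=timedelta(days=1)))
```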

Fig 1. Representative sample of evaluable telemetry features

Query Language

We are always open to adopting new query languages from new EDR solutions. However, we have requirements that every solution and its query language must meet. First, the EDR must allow the telemetry to be queried. If it does, then its language must be powerful enough for us to create hunting rules and adapt all our threat knowledge to the new language. The lack of a proper implementation of some of the features in this section could constrain investigations with that solution when signs of suspicious activity appear, as the questions we ask of the telemetry require a certain level of expressive power.

Fig 2. Representative sample of evaluable query language features

Administrative tools

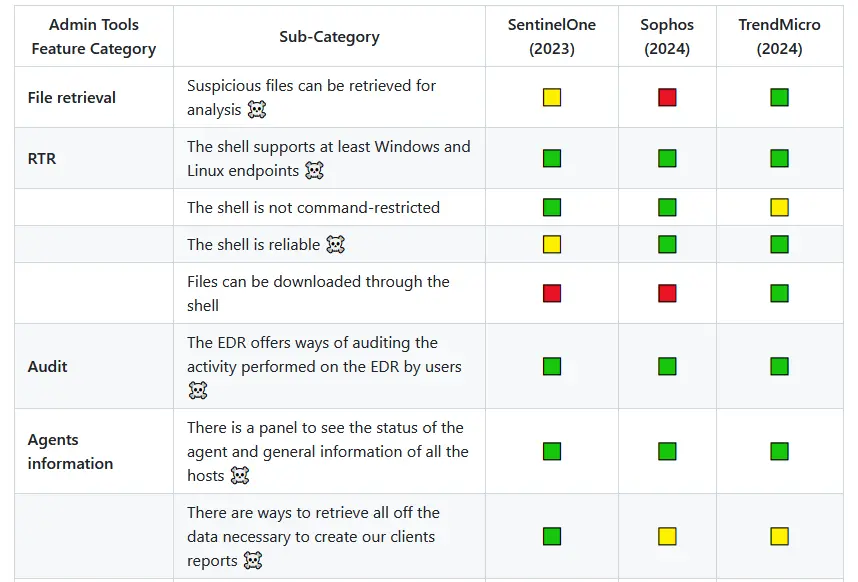

This section covers all the administrative tooling features that we need to have available in an EDR solution. From the implementation of audit logs to the implementation of security policies and their flexibility, we evaluate several categories of features.

Features

In this section we have general features that are not so much related to the administrative side of the EDR as to the day-to-day use of the solution to perform our tasks. Hence, we find features related to, for example, the implementation of artifact retrieval mechanisms, the flexibility to establish exclusions, or how response and mitigation actions can be applied, among many others.

Fig 4. Representative sample of evaluable features

API

By the time the evaluation reaches this section, we already have an idea of the EDR's performance, its strong points and its shortcomings. In this section we look at how much of its potential is available through the API, which may enable or constrain the sustainability of the service with a given EDR solution.

Fig 5. Representative sample of evaluable API features

UI

While it is not the most important section, the UI section shouldn't be underestimated. A Threat Hunter must be able to act and move smoothly through the EDR's panels during an investigation. In that sense, we are not talking about the Threat Hunter's «comfort», but about how the UI can have a positive or negative impact when it comes to minimising analysis time.

Fig 6. Representative sample of evaluable user interface features

MITRE Engenuity

We have integrated the MITRE Engenuity results into our scoring system. More specifically, we use the detections that each EDR was able to generate, without any configuration changes, in each of MITRE's exercises. This gives us an idea of the raw capability of the EDR when it comes to detecting malicious behaviour, and of how it has been evolving over the years.

Fig 7. Representative sample of evaluable MITRE Engenuity campaigns

Conclusions

In this last section we have a summarised version of every other section for each EDR evaluation, together with a conclusion on how compatible that solution is with our Threat Hunting model. Note that although we have an empirical scoring system, the final conclusions are heavily influenced by the interpretation that the hunters make of that scoring. In this section we merge the raw data that tells us the pros and cons of the evaluated solution with the expert opinion of the team, to reach a final conclusion on whether the EDR, in its current state, is suitable to a greater or lesser extent for deploying a Threat Hunting service that meets our quality standards.

It is worth mentioning that this methodology is meant to be applied periodically to the solutions. By doing so, we learn not only about their current state, but also about how they are evolving and in which direction.
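Tracking that evolution amounts to comparing two rounds of the same evaluation. The sketch below assumes each round is stored as a mapping from feature name to the colour it received in the three-colour scoring explained under «Defining a scoring system»; the feature names are hypothetical placeholders.

```python
# Ordering of the three colours, from worst to best.
ORDER = {"red": 0, "yellow": 1, "green": 2}


def evolution(previous, current):
    """Compare two evaluation rounds and report only the features whose score changed."""
    changes = {}
    for feature, colour in current.items():
        before = previous.get(feature)
        if before is None:
            changes[feature] = "new"          # feature not evaluated in the previous round
        elif ORDER[colour] > ORDER[before]:
            changes[feature] = "improved"
        elif ORDER[colour] < ORDER[before]:
            changes[feature] = "regressed"
    return changes


# Hypothetical scores from two consecutive rounds.
previous = {"audit_logs": "yellow", "api_query": "red", "artifact_retrieval": "green"}
current = {
    "audit_logs": "green",
    "api_query": "red",
    "artifact_retrieval": "yellow",
    "remote_shell": "green",
}
print(evolution(previous, current))
```

Unchanged features are deliberately left out of the result, so the output reads as a changelog of the solution between rounds.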

Fig 8. Representative sample of high-level evaluation results by evaluable category

Challenges of EDR evaluation

Defining a NO-GO feature

We are aware that some of the features we require in an EDR solution are more important than others. Therefore, we need to be able to single out the features that we consider to play a central role in deploying our Threat Hunting service. We call them NO-GO features.

The absence of these features in an EDR solution is considered a significant lack of maturity, so that solution will probably not be homologated as sufficiently compatible with our Threat Hunting model.
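A minimal way to express this rule in code is to flag each feature as NO-GO or not and list the NO-GO features that are missing. The sketch assumes every feature carries a colour score in which red means «not implemented», as in the scoring system of this methodology; the feature names and the choice of which ones are NO-GO are purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    colour: str          # "red", "yellow" or "green"
    no_go: bool = False  # True if the feature is considered essential


def missing_no_go(features):
    """Names of the NO-GO features that the solution does not implement at all."""
    return [f.name for f in features if f.no_go and f.colour == "red"]


# Hypothetical evaluation extract.
features = [
    Feature("telemetry_query", "green", no_go=True),
    Feature("raw_telemetry_export", "red", no_go=True),
    Feature("dark_mode_ui", "red"),
]
blockers = missing_no_go(features)
print(blockers)
```

The function returns the list of blockers rather than a pass/fail boolean, so that the team can discuss each missing feature and its impact before deciding.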

Note that part of the purpose of these evaluations is to share, in a deterministic and empirical way, why we lean towards certain solutions instead of others, but by no means do we seek to make our evaluation a technology guillotine. We do not rule out using a solution that hasn't been homologated, as long as we can be transparent about its limitations and they are acceptable to our customers.

Lack of documentation or cooperation by support

During some of the first evaluations we carried out, it became apparent that some EDR solutions have richer documentation than others. Likewise, dealing with the products' support teams can sometimes be quite a tedious task.

The first levels of support often act as a kind of redirector to the available documentation, even when the ticket is precisely about the lack of publicly available documentation. Then, if you are lucky enough to get your ticket escalated, they are not always cooperative when it comes to answering questions about the infrastructure or providing information that is not already published.

On the other hand, we must also admit that in some of the evaluations we have encountered very cooperative support teams (although this is not the most common case).

Defining a scoring system

Our EDR evaluation methodology uses several inputs to arrive at the final conclusion of whether or not an EDR solution can be homologated against our quality standard for a Threat Hunting service. Specifically, we consider:

- The degree and quality of implementation of the features that we have included in the methodology as desirable or necessary (NO-GO features). We use a three-colour system to score this. If a feature isn't implemented, we mark it red. If a feature is partially implemented or has important caveats, we mark it yellow. Finally, if a feature is implemented in a satisfactory manner, we mark it green.

- As we previously stated, every EDR evaluated has to be tested for a sufficient period of time in a deployed environment in which we can verify its capabilities. During the hands-on part of the evaluation, answering the question «Is this feature implemented?» with the three-colour system is often not enough. To add detail to the score given, we use notes that convey relevant information about the implementation of that feature, especially when it has been scored yellow.

- Once the evaluation is complete, we write a synthesis for each section of features, in which we gather the most important findings, taking into account the three-colour scoring and the notes, to highlight the best and worst of the EDR in that particular section. This information is available in the «Conclusions» section.

- In the last step, the team takes as input the grading and notes of the features, as well as the synthesised version created in the previous step. With these inputs, the Threat Hunting team can properly discuss the evaluation results and reach a group consensus on the final conclusion (i.e. the answer to the question «Is this EDR compatible with our quality standard to deploy our Threat Hunting service without constraints?»).
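The first three inputs above lend themselves to a simple data model. The sketch below is one possible representation under our own assumptions (the `Score` record, the section names and the notes are invented for the example): it counts colours per section and collects the notes, producing the raw material for the per-section synthesis. The final conclusion is intentionally not computed, since it comes from the team's discussion and not from a formula.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Score:
    section: str    # e.g. "Telemetry", "API"
    feature: str    # hypothetical feature name
    colour: str     # "red", "yellow" or "green"
    note: str = ""  # free-text justification, mostly used for yellow scores


def synthesise(scores):
    """Group scores by section, counting colours and collecting the analysts' notes."""
    summary = {}
    for s in scores:
        entry = summary.setdefault(s.section, {"counts": Counter(), "notes": []})
        entry["counts"][s.colour] += 1
        if s.note:
            entry["notes"].append(f"{s.feature}: {s.note}")
    return summary


# Hypothetical scores for two sections.
scores = [
    Score("Telemetry", "process_creation", "green"),
    Score("Telemetry", "dns_queries", "yellow", "only available on some operating systems"),
    Score("API", "run_query", "red", "no endpoint to launch queries"),
]
summary = synthesise(scores)
print(summary["Telemetry"]["counts"], summary["Telemetry"]["notes"])
```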

Sharing the product of our project

As discussed at the beginning of this article, the way to perform Threat Hunting is still subject to debate. However, we firmly believe that our model is one of the most complete approaches in the sector, as we try to better ourselves whenever possible and prioritise providing value through all of our projects. It is for that reason that we have decided to share with you here the implementation of our EDR evaluation methodology, with a sample of results to ease its interpretation.

By sharing this methodology and some of its results, we aim not only to illustrate this article, but also to demonstrate how important it is to choose a good technology and how seriously that choice can condition the Threat Hunting service that will use it.

We hope that this article serves as a showcase of how we do things and of the importance we attach to a serious Threat Hunting service. We would also like to encourage other researchers in the community to exchange ideas and experiences, as well as to give us feedback that will allow us to further improve the project.

Happy Hunting!